Everyone makes mistakes during data analysis. Literally everyone. The question is not what errors you make, it's what systems you put into place to prevent them from happening. Here are mine. [a thread because I'm sad to miss #SIPS2018]

External Tweet loading...

If nothing shows, it may have been deleted

by @siminevazire view original on Twitter

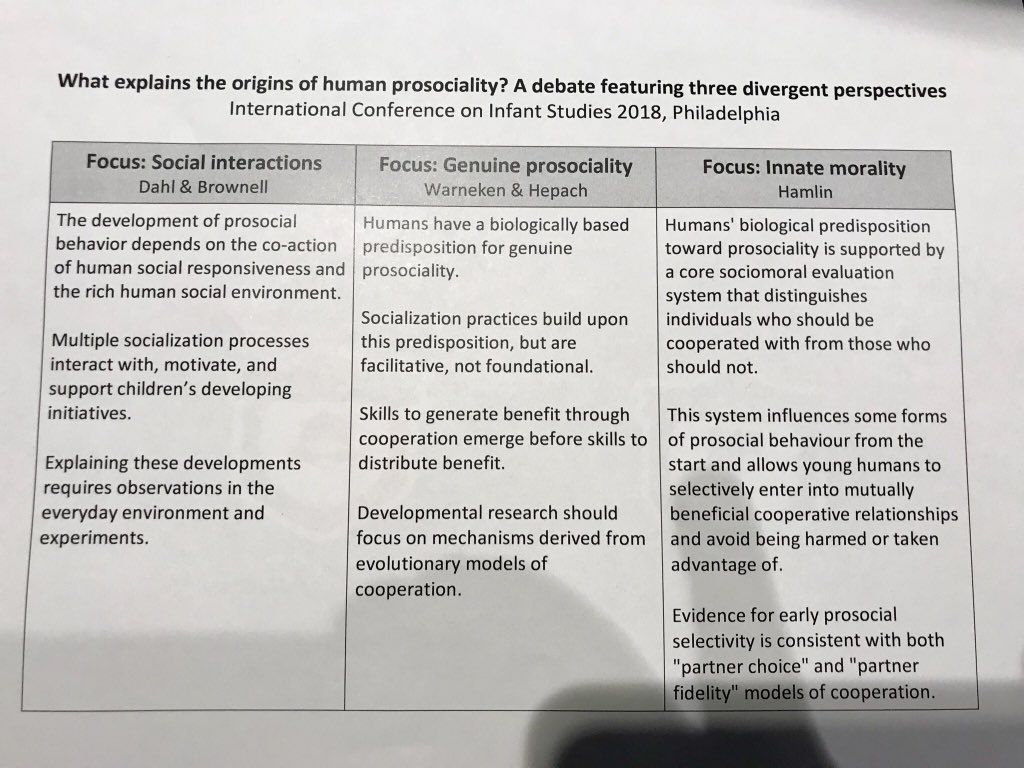

A big wakeup call for me was an errror I made in this paper: langcog.stanford.edu/papers/FSMJ-de…. Figure 1 is just obviously wrong in a way that I or my co-authors or the reviewers should have spotted. Yet we all missed it completely. Here's the erratum.

onlinelibrary.wiley.com/doi/abs/10.111…

onlinelibrary.wiley.com/doi/abs/10.111…

Since then, we've audited dozens of papers (I like this term much more than "data thugged" @jamesheathers). E.g. in @Tom_Hardwicke's new manuscript: osf.io/preprints/bits…. Summary: the error rate is very high. Most errors don't undermine papers, but most papers have errors.

So what do we do? 1) Literate programming. If you have to write code that others can read, you catch typos and errors much more quickly. And if you're not scripting your data analysis using code, it's time to start.

babieslearninglanguage.blogspot.com/2015/11/preven…

babieslearninglanguage.blogspot.com/2015/11/preven…

2) Standardize your workflow. If you do things consistently, you will be less likely to make new, ad-hoc errors that you don't recognize. For me this has meant learning tidyverse.org - an amazing ecosystem for R data analysis.

My tutorial: github.com/mcfrank/tidyve….

My tutorial: github.com/mcfrank/tidyve….

3) Version control. osf.io is a gateway. git and github.com take time to learn but are far better tools for collaborating. If you track what you did you will not lose files/erase work/accidentally modify data, blocking a whole set of possible errors!

4) Code review. I wish we did this more. But co-working (babieslearninglanguage.blogspot.com/2017/11/co-wor…) and pair-programming can help catch errors and promote clean, standardized analysis. And you can always ask a friend to "co-pilot" after you are done.

5) Openness by default. If you curate and analyze data as though someone who thinks you are wrong is watching you and criticizing, you will be much more careful. This is a very good thing. More thoughts in our transparency guide: psyarxiv.com/rtygm.

In sum: of course we should be afraid of errors! But don't *just* be afraid. Accept that you *have* made errors - and will make more. We all do. Act to put systems in place that help you catch and correct errors before they enter the literature. [end]

• • •

Missing some Tweet in this thread? You can try to

force a refresh