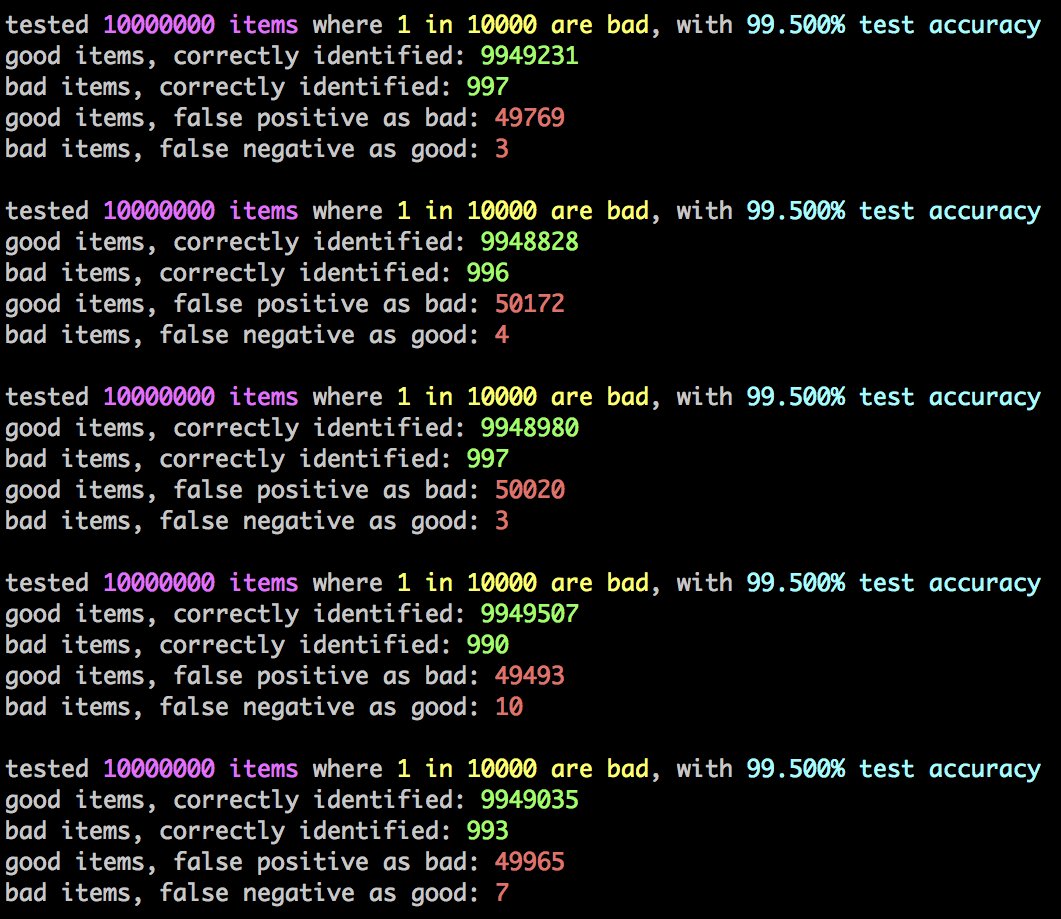

Regards #Article13, I wrote up a little command-line false-positive emulator; it tests 10 million events with a test (for copyrighted material, abusive material, whatever) that is 99.5% accurate, with a rate of 1-in-10,000 items actually being bad.

For that scenario - all of which inputs are tuneable - you can see that we'd typically be making about 50,000 people very upset, by miscategorising them as copyright thieves or perpetrators of abuse:

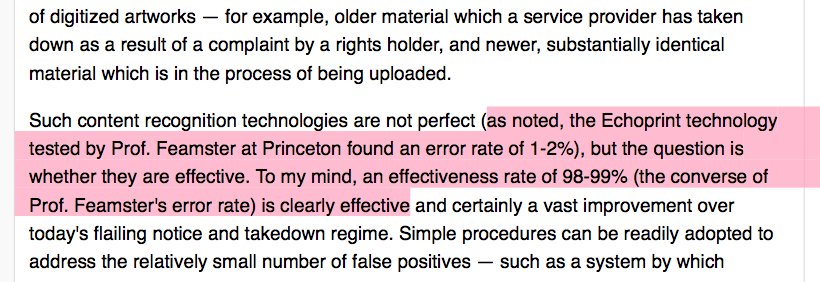
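For anyone who wants to check the arithmetic without running a simulation, here is a minimal Python sketch of the expected values. It is not the emulator linked in this thread; the function name `expected_buckets` and the bucket labels are made up for illustration, and it assumes the test is equally accurate on good and bad items.

```python
def expected_buckets(uploads, accuracy, one_in_n):
    """Expected counts for the four outcomes of a filter.

    Assumption: the same accuracy applies to good and bad uploads.
    """
    bad = uploads / one_in_n
    good = uploads - bad
    return {
        "bad_blocked": bad * accuracy,          # true positives
        "bad_missed": bad * (1 - accuracy),     # false negatives
        "good_blocked": good * (1 - accuracy),  # false positives
        "good_passed": good * accuracy,         # true negatives
    }


buckets = expected_buckets(uploads=10_000_000, accuracy=0.995, one_in_n=10_000)
for name, count in buckets.items():
    print(f"{name:>12}: {count:,.0f}")
```

With these inputs the expected values work out to about 49,995 good uploads blocked against 995 bad uploads blocked, which is where the "about 50,000 people" figure comes from.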

But let's vary the stats: @neilturkewitz is pushing a 2017 post by very respected fellow geek and expert @paulvixie in which Paul speaks encouragingly about a 1-to-2% error rate; let's split the difference, use 1.5% errors, ie: 98.5% accuracy: circleid.com/posts/20170420…

Leaving everything else the same, we have now tripled the number of innocent people that we annoy with our filtering, raising it to ~150,000 daily; in exchange we stop about 990 badnesses per day.

Let's be blunt: we make victims of, or annoy, about 150 thousand people each day, in order to prevent fewer than 1,000 infringements, if we use these numbers. The only other number that we can mess with is the "rate of badness", because the number of uploads is what defines "scale".

So let's do that: let's assume that the problem (eg: copyright infringement) is very much WORSE than 1-upload-in-10,000; instead let's make it 1 in 500. What happens? This is what happens: you still upset 150,000 people, but you catch nearly 20,000 infringements.

If 1 in every 500 uploads is bad (infringing, whatever) then you annoy 150,000 people for every 20,000 uploads you prevent; that's still 7.5 people you annoy for every copyright infringement that you prevent. BUT what if the problem is LESS BAD than 1 in 10,000?

I was at a museum yesterday, and I uploaded more than 50 pictures which (as a private individual) I'm free to share; the vast majority of uploads to Facebook by its 2 billion users will be "original content" of varying forms, stuff that only the account-holder really cares about.

So let's go with an entirely arbitrary guess of 1-in-33,333 rate of badness - that amongst every 33,333 pictures of hipsters vomiting, of "look at this sandwich" and of "here's my cute cat", there's only 1 copyrighted work. What then?

What happens is that you still piss-off 150,000 people, but your returns are really low - you prevent only about 300 badnesses in exchange; at which point you really have to start asking about the cost/benefit ratios.
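To put the three badness rates side by side, here is a rough Python sketch of the "people annoyed per infringement prevented" ratio at 98.5% accuracy. The helper name `annoyed_per_block` is hypothetical, and the figures are expected values rather than simulated ones.

```python
UPLOADS = 10_000_000
ACCURACY = 0.985


def annoyed_per_block(one_in_n, uploads=UPLOADS, accuracy=ACCURACY):
    """Return (false positives, bad uploads blocked, ratio of the two)."""
    bad = uploads / one_in_n
    false_positives = (uploads - bad) * (1 - accuracy)
    blocked = bad * accuracy
    return false_positives, blocked, false_positives / blocked


for one_in_n in (500, 10_000, 33_333):
    fp, blocked, ratio = annoyed_per_block(one_in_n)
    print(f"1 in {one_in_n:>6,}: {fp:>9,.0f} annoyed, "
          f"{blocked:>7,.0f} blocked, {ratio:6.1f} annoyed per block")
```

The number of people annoyed barely moves, because nearly all uploads are innocent whichever rate you pick; only the number of infringements blocked changes, so the ratio climbs from under 8 at 1-in-500 to several hundred at 1-in-33,333.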

If you want to play with the code: github.com/alecmuffett/ra…

This sort of math might be useful to @Senficon, I suppose, especially in relation to the thread at

https://twitter.com/AlecMuffett/status/1015507133838786560

I would _REALLY_ love to have some little javascript toy with a slider for test accuracy, some input box for 1-in-N badness rate, and then have the four buckets broken out for visualisation; but I am a backend coder and my JS-fu is weak.

And in case it's not obvious, I take issue with @paulvixie's somewhat glib assertion that "Simple procedures can be readily adopted to address the relatively small number of false positives" - for the reasons that I demonstrate above, & also in this essay: medium.com/@alecmuffett/a…

One last little addendum: let's go back to a badness rate of 1-in-10000, but let's drop the test accuracy to a more plausible 90%, what happens? Answer: you piss off nearly 1 million people per day, to prevent about 900 infringements.
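The effect of test accuracy on its own can be sketched the same way. This is an illustrative Python snippet, not the linked emulator; `daily_outcomes` is a made-up name, and the defaults (10 million uploads, 1-in-10,000 badness) are the figures used in this thread.

```python
def daily_outcomes(accuracy, uploads=10_000_000, one_in_n=10_000):
    """Expected (false positives, bad uploads blocked) for one day."""
    bad = uploads / one_in_n
    false_positives = (uploads - bad) * (1 - accuracy)
    blocked = bad * accuracy
    return round(false_positives), round(blocked)


for accuracy in (0.995, 0.985, 0.90):
    fp, blocked = daily_outcomes(accuracy)
    print(f"{accuracy:.1%} accurate: {fp:>9,} false positives, "
          f"{blocked:>4,} blocked")
```

False positives scale with the error rate (0.5%, 1.5%, 10%) multiplied by almost the entire upload volume, while the number blocked can never exceed the 1,000 bad uploads that exist.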

Have a nice saturday!

This thread has been nicely unrolled at threadreaderapp.com/thread/1015594… for easier reading in web browsers.

This is a cute way to phrase some of the results:

https://twitter.com/compbiobryan/status/1015625001511383040

Revisiting the above: let's assume the test has a Vixie-like accuracy of 98.5% & that BADNESS IS REALLY PREVALENT: 1 in every 500 uploads is bad.

What happens each day?

- you annoy 150,000 innocents

- & stop 19,700 badnesses

- 300 badnesses SURVIVE THE FILTER

Is this good?
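One way to look at "is this good?" is to ask what share of the blocked uploads were actually bad. A small Python sketch of that sum, using expected values and assuming the 98.5% accuracy applies equally to good and bad uploads (the variable names are mine):

```python
uploads = 10_000_000
accuracy = 0.985
bad = uploads / 500                      # 20,000 bad uploads per day

blocked_bad = bad * accuracy             # badnesses stopped
survived_bad = bad - blocked_bad         # badnesses that get through
blocked_good = (uploads - bad) * (1 - accuracy)  # innocents annoyed

# Of everything the filter blocks, how much was really bad?
share_bad = blocked_bad / (blocked_bad + blocked_good)

print(f"stopped:  {blocked_bad:,.0f}")
print(f"survived: {survived_bad:,.0f}")
print(f"annoyed:  {blocked_good:,.0f}")
print(f"share of blocked uploads that were bad: {share_bad:.1%}")
```

Even with badness this prevalent, only roughly 12% of blocked uploads are bad; the other 88% or so belong to innocents.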

HYPOTHETICAL: How much badness do you need, with a 98.5%-accurate test, for the False-Positive-Rate (loss) to EQUAL the Block-Rate (gain)?

Answer: about 1 in 67 postings have to be "bad" in order to break even (ignoring costs of overhead, power, CPU, etc)
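The break-even point can also be worked out directly. If p is the fraction of uploads that are bad and a is the accuracy, false positives equal blocks when p × a = (1 − p) × (1 − a), which simplifies to p = 1 − a. A minimal Python sketch, assuming equal accuracy on good and bad uploads (the function name is hypothetical):

```python
def break_even_one_in_n(accuracy):
    """Badness rate, as 1-in-N, where false positives equal bad uploads blocked."""
    # p * a == (1 - p) * (1 - a)  simplifies to  p == 1 - a
    prevalence = 1 - accuracy
    return 1 / prevalence


n = break_even_one_in_n(0.985)
print(f"break-even at about 1 in {n:.0f}")
```

In other words, under these assumptions the break-even badness rate is simply the test's error rate: 1.5% of uploads, or about 1 in 67.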

…at that point you are making as many bad guys unhappy, as good guys.

Probably, nobody is happy.

HYPOTHETICAL #2 — "…but Alec, what if you are a smaller content-sharing platform and only get 10,000 new pieces of content per day?"

Answer: at 98.5% accuracy you will piss-off about 140 to 180 users per day; results shown with badness rates of 1:500 and 1:10000 for comparison

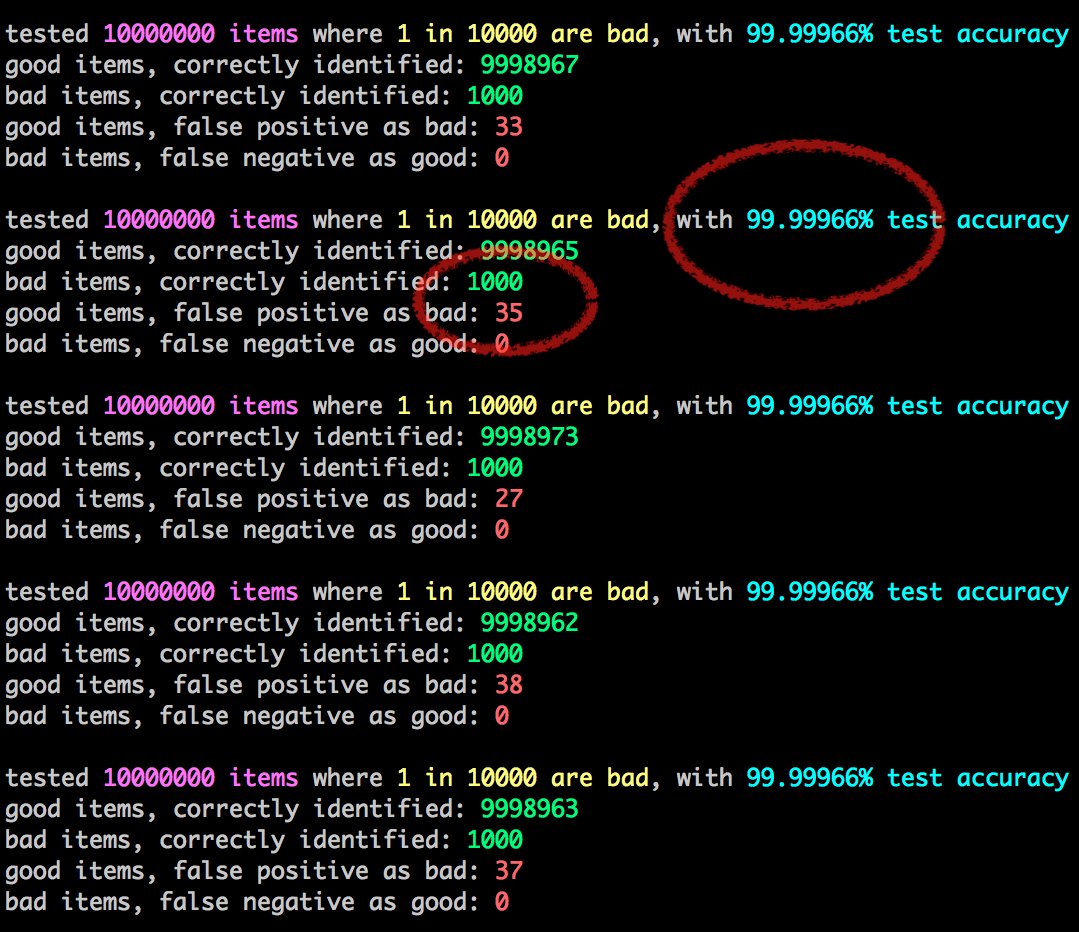
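The day-to-day spread on a small platform comes from randomness, which a toy simulation shows well. This Python sketch is my own guess at how such an emulator might work, not the code linked in this thread; `simulate_day` and the seed are arbitrary, and it assumes each upload is independently bad with probability 1/N and the test is independently right with probability equal to the accuracy.

```python
import random


def simulate_day(uploads, accuracy, one_in_n, rng):
    """Simulate one day of filtering; return (false_positives, blocked_bad)."""
    false_positives = blocked_bad = 0
    for _ in range(uploads):
        is_bad = rng.random() < 1 / one_in_n
        test_correct = rng.random() < accuracy
        # A correct test flags bad items only; a wrong test flags good ones.
        flagged = is_bad if test_correct else not is_bad
        if flagged and is_bad:
            blocked_bad += 1
        elif flagged:
            false_positives += 1
    return false_positives, blocked_bad


rng = random.Random(2018)  # fixed seed so the run is repeatable
for one_in_n in (500, 10_000):
    for day in range(3):
        fp, blocked = simulate_day(10_000, 0.985, one_in_n, rng)
        print(f"1:{one_in_n:<6} day {day + 1}: "
              f"{fp} annoyed, {blocked} blocked")
```

The expected number of false positives is about 150 per day at either badness rate, with individual days scattering around that figure.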

HYPOTHETICAL #3— If you're in business, you've probably heard of "Six Sigma", a metric of quality; a friend suggested that I model it, & at SixSigma the costs of Filtering & False Positives are CLEARLY BEARABLE: about 34 FALSE POSITIVES IN 10 MILLION UPLOADS. There's one problem:

Six Sigma MEANS 99.99966% ACCURACY - ie: that the copyright-identification engine can only make 3.4 mistakes per million tests; which nicely multiplies-out in the example below. It's never going to happen in real life.

en.wikipedia.org/wiki/Six_Sigma

With these same numbers (1:10,000 badness, 10M uploads), a test which is merely 99.99% accurate (a little over 5-Sigma) gives you approximately as many false positives as badnesses, a 50-50 ratio.
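Both figures can be checked in terms of errors per million tests. A short Python sketch of that arithmetic, using expected values (the `false_positives` helper is made up for illustration):

```python
UPLOADS = 10_000_000
BAD = UPLOADS // 10_000  # 1,000 bad uploads at a 1:10,000 badness rate


def false_positives(errors_per_million):
    """Expected good uploads wrongly blocked at a given error rate."""
    good = UPLOADS - BAD
    return good * errors_per_million / 1_000_000


six_sigma = false_positives(3.4)   # 99.99966% accurate
four_nines = false_positives(100)  # 99.99% accurate

print(f"Six Sigma: about {six_sigma:.0f} false positives")
print(f"99.99%:    about {four_nines:.0f} false positives vs {BAD:,} bad uploads")
```

Going from 3.4 to 100 errors per million is enough to take false positives from a few dozen to roughly the same count as the badness being hunted.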
