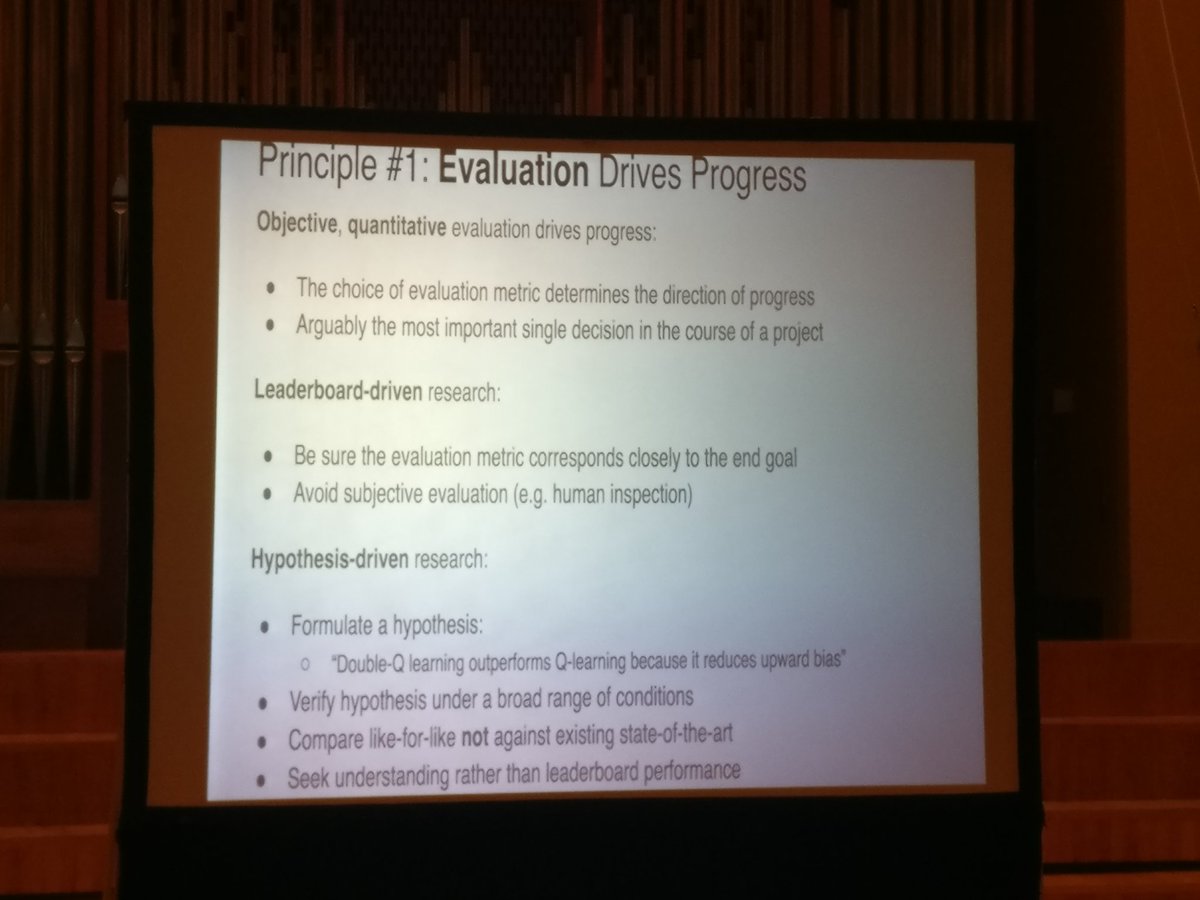

David Silver on Principles for Reinforcement Learning at the #DLIndaba2018. Important principles that are not only applicable to RL, but to ML research in general. E.g. leaderboard-driven research vs. hypothesis-driven research (see the slides below).

Principle 2. How an algorithm scales is more important than its starting point. Avoid performance ceilings. Deep Learning is successful because it scales so effectively.

Principles are meant to be controversial. I would argue that sample efficiency is at least as important.

Principles are meant to be controversial. I would argue that sample efficiency is at least as important.

Principle 3. Generality (how your algorithm performs on other tasks) is super important. Key is to design a diverse set of challenging tasks.

This. We should evaluate on out of distribution data and new tasks.

This. We should evaluate on out of distribution data and new tasks.

Principle 4. Use agent's experience rather than human expertise. Don't rely on engineered features or heuristics.

Hmm. Maybe true in the setting where you can sample an infinite number of experiences but domain expertise and inductive biases are important when data is limited.

Hmm. Maybe true in the setting where you can sample an infinite number of experiences but domain expertise and inductive biases are important when data is limited.

Principle 5. State should be built as the state of the model, i.e. an RNN's hidden state and not defined in terms of environment. No constraint on state. Only agent's subjective view of world matters. Should not reason about external reality, which is limiting.

Principle 6. Agents influence the stream of data and experiences. Agents should have access to features to control steam stream of environment. Focus should not just be on maximizing reward but also building control of the stream.

Principle 7. Value functions efficiently summarize the state of the world and the future. Multiple value functions allow us to model multiple aspects of the world. Can help to control the stream.

Principle 8. Imagining the future (what will happen next) can be used for planning. Same RL algorithms can be applied to learn from imagined experience (as in Alphago using MCTS and the value function).

Principle 9. Leverage strong function approximators. Algorithmic complexity can be pushed into neural network architecture (even MCTS, hierarchical control, etc. can be modeled with a NN).

This necessitates more tools to understand what our models actually learn.

This necessitates more tools to understand what our models actually learn.

Principle 10. Meta learning is the way to go. Not even the architecture is handcrafted anymore. Everything is learned end to end. The neural network takes care of everything with as little human input as possible.

Inductive bias should still be useful, though.

Inductive bias should still be useful, though.

• • •

Missing some Tweet in this thread? You can try to

force a refresh