Excited for @katecrawford 's talk "AI Now and the Politics of Classification"

Will try to live tweet, but if I can't keep up, the good news is that @rctatman is sitting right next to me, and I'm sure she'll be on it :)

Will try to live tweet, but if I can't keep up, the good news is that @rctatman is sitting right next to me, and I'm sure she'll be on it :)

has been studying social implications of large-scale data, machine learning, and AI and sees us now reaching an inflection point where these technologies are now touching many aspects of our lives: health care, immigration, policing.

: What does AI mean? So much hype, need to define our terms.

Notes major changes in the last 6 years: cheap computing power, changes in algorithms, and vast pipeline of data.

Notes major changes in the last 6 years: cheap computing power, changes in algorithms, and vast pipeline of data.

: When people talk about, they're talking about a constellation of techniques, currently dominated by ML.

: But it's not just techniques. It's also social practices. AI is both technical & social. Current rise as important as rise of computing and advent of mass media.

: Bias and unfair decision making happening all across: vision, #nlproc, and other.

How? We are training contemporary AI systems to see through the lens of our own histories.

How? We are training contemporary AI systems to see through the lens of our own histories.

: Slide full of instances of high profile bias in AI. But they're not new --- amplified versions of existing problems.

: Google's Culture & Arts app was heavily skewed towards Western art by white male artists. Women & POC get much more stereotyped representations.

: Image search for 'CEO' -- white dudes in suits. First woman to show up: CEO Barbie. (As of when she ran this test.)

(#livetweeting problems: language about gender is very binary, not how I would say it. Also "women & POC" erases WOC...)

asks who's heard of a story like this. Most (all?) hands in the auditorium go up. Hooray for awareness of bias in AI. Not just this audience ... but also major players in industry.

But: "Science may have cured biased AI" headline... *sigh*

But: "Science may have cured biased AI" headline... *sigh*

"Data can be neutralized" is the new "Data is neutral"

: Bias in systems most commonly caused by bias in data, but data can only be gathered about the world that we have---with its long history of bias and discrimination.

: If we don't start contending with this, we're going to make it worse. Because structural bias is inherently social.

: Bias has contradictory meanings, if you're coming from ML, law, social science.

14c: Oblique line

16c: Unfair treatment

20c: Technical meaning in statistics ... ported into ML

14c: Oblique line

16c: Unfair treatment

20c: Technical meaning in statistics ... ported into ML

: In law, bias means a judgment based on preconceived notions or prejudices, rather than impartial consideration of the facts.

: A completely unbiased system in the ML sense can be biased in the legal sense.

But we have to work across disciplines if we're going to solve these problems.

But we have to work across disciplines if we're going to solve these problems.

: Ex is stop & frisk. System can't think beyond its own givens. Bring in other disciplines & learn about history of biased policing --- and get a different perspective on how to build the model.

: Humanities & social sciences critical in avoiding essentializing social constructs like "criminality".

: Forthcoming paper overviews CS literature on bias. Majority of it understands bias as harms of allocation. When a system allocates or withholds resources/opportunities from certain groups Economic view.

: But what about systems that don't allocate resources? Then we see representational harms. e.g. Latanya Sweeney's study of discrimination in ad delivery dataprivacylab.org/projects/onlin…

: Harms aren't just downstream (effect on job prospects) but also upstream. Stereotyped representations are bad in themselves.

: Need to consider how ML is creating representations of human identity.

Allocation is getting all the attention because it's immediate. Representation is longterm, affecting attitudes & beliefs.

Allocation also much easier to quantify.

Allocation is getting all the attention because it's immediate. Representation is longterm, affecting attitudes & beliefs.

Allocation also much easier to quantify.

: Suggests that representational harms are actually at the root of all of the others.

: Two big papers last year on sexist associations in word embeddings. (Bolukbasi et al, and Bryson & co-author.)

Word embeddings aren't just for analogies. Also used across lots of apps, like MT.

Word embeddings aren't just for analogies. Also used across lots of apps, like MT.

: Harms of recognition. Recognition isn't just about downstream effects, but also about recognizing someone's humanity. If that's erased by a system...

: Denigration --- classic example is Google photos / gorillas example.

Being able to understand that problem requires understanding history & culture. Need people in the room who can call it out and say this has to change.

Being able to understand that problem requires understanding history & culture. Need people in the room who can call it out and say this has to change.

: Underrepresentation -- gender skewed professions are even more gender skewed in image search results.

: The many more than $1 million question --- what should we do about it?

: If we try to make the data neutral ... who's idea of neutrality? How do you account for history of 100s of discrimination?

: Do you reflect current state of affairs, or try to reflect what you (who?) would like to see.

: Both of those are interventions and thus political.

Need to think more deeply about what is being optimized for in the ML set up.

Need to think more deeply about what is being optimized for in the ML set up.

: What if focus on making ML systems more fair is preventing us from looking at a more fundamental issue, like how to reform the criminal justice system, or cash bail?

: Technical internventions are worth working on, but we need more too to solve this.

Need a different theoretical tool kit.

Need a different theoretical tool kit.

: Mostly think of bias as a pathology that keeps coming up and could be fixed. But what if it's an inherent feature of classification?

: AI has supercharged our ability to classify the world we live in. Classification is at the heart of AI today --- grouping text, classifying images as faces, putting people into credit pools.

: But we need to consider what is at stake when we classify people.

1. Classification is always a product of its time

2. We're currently living through the biggest experiment on classification in human history

1. Classification is always a product of its time

2. We're currently living through the biggest experiment on classification in human history

: "Go all the way back to the beginning ... Aristotle." Aristotle's work on classifying the natural world now seems like common sense.

[EMB: I wish we could get away from framing Aristotle as "the" beginning.]

[EMB: I wish we could get away from framing Aristotle as "the" beginning.]

: conlang example with 11 genders from 1653.

: Facebook currently lists 56 answers to "Gender?" field.

: But Facebook could have just given a freetext field. Or could have left out the question. Each of these choices has lasting implications & social effects

: Labeled Faces in the Wild benchmark dataset --- mostly male, mostly white.

Most common person in it: George W. Bush

b/c it was made by scraping Yahoo! news images from 2002.

Most common person in it: George W. Bush

b/c it was made by scraping Yahoo! news images from 2002.

: 530 / 13000 images are of W

: Great reminder that these datasets reflect both culture & hierarchy of the world they come from.

Implications of choice of using e.g. news photography...

Implications of choice of using e.g. news photography...

: For current ML training data sets, there are "people", "things", "ideas". ... nice family tree & classification.

: Another case in point of classifications with lasting, harmful effects: DSM II.

: It was just two years ago that the Dewey Decimal system moved homosexuality out of "mental derangements".

Classificatory harm.

Classificatory harm.

: The harms persist even after the labels are removed.

: Onto the infamous AI gaydar paper. Beyond the critiques ... what about the ethics of classification? Consider countries which still criminalize homosexuality, but also this reifies and essentializes cultural and complex social categories.

: "Book of Life" in apartheid South Africa determined where you can live, what kind of job you can have etc. Once again fluidity is lost.

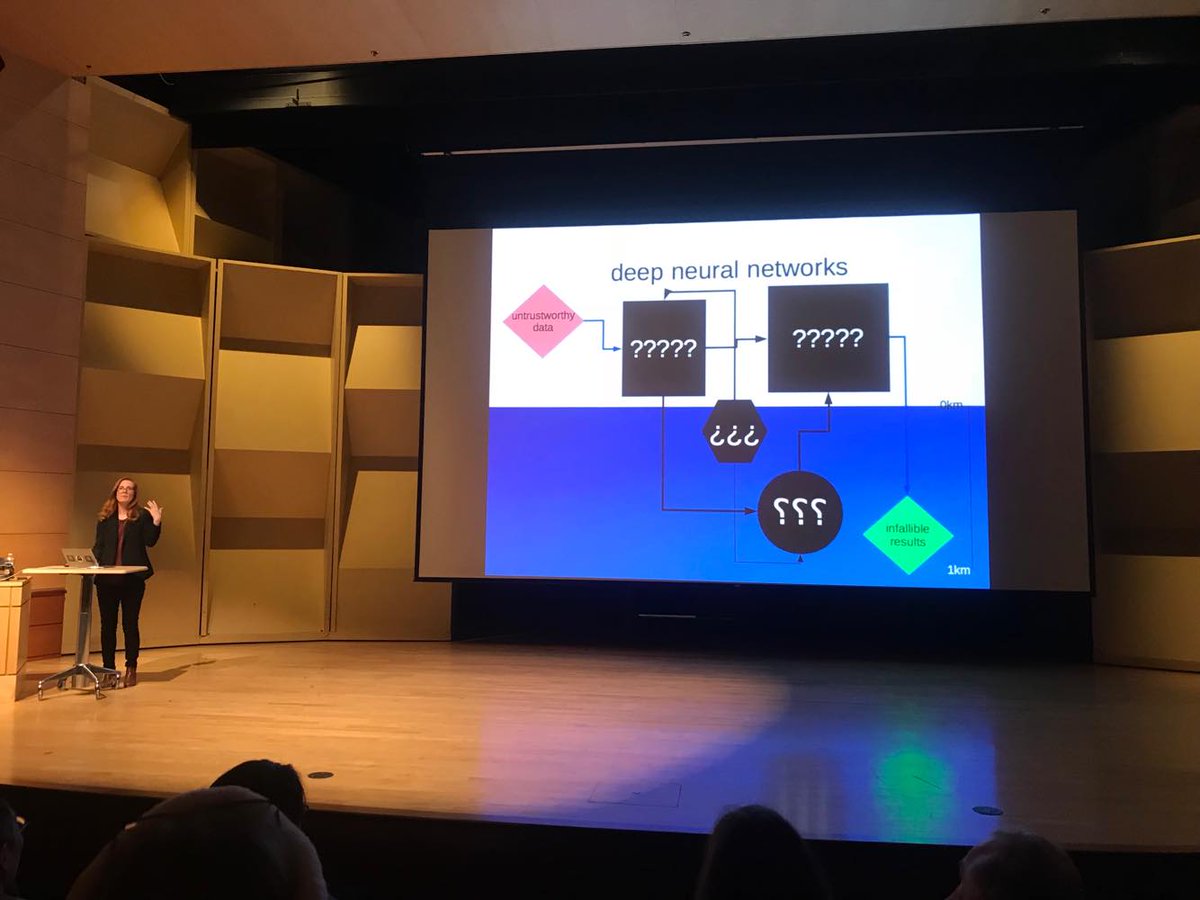

: 2016 Wu & Zhang paper ... deep neural nets: input untrustworthy data -> ??? -> infallible results.

: Not free of bias, just bias hardcoded. Modern physiognomy.

And there's currently start ups making money on this (?!) e.g. Faceception.

And there's currently start ups making money on this (?!) e.g. Faceception.

(I was looking for a ref to @mmitchell_ai 's work there, but there wasn't one.)

: So what can we do? How can we ensure that AI doesn't reproduce the damaging effects of classification systems in the past?

: Fairness forensics. Pre-release trials, monitoring of systems in use, standards for the life cycles of data sets.

: Algorithm impact assessments. Transparency about algorithmic systems in use.

: Connect across fields. We definitely need interdisciplinarity. Prioritize diversity and inclusion across the board. Those working on high stakes system need to work with those with domain expertise & especially those with connections to affected communities.

: AI NOW set up to be an interdisciplinary hub that can be a home for those kinds of studies.

: Urgent problem is to understand the social implications of AI.

: Finally, the ethics of classifying. Which tools should we not build?

: As the tech industry seeks to professionalize fairness and hire people fix these problems in house, hope that they'll start to look at the broader harms of allocating people to reified identity categories.

: Rapid expansion of AI is seen as inevitable, and the best we can do is to tame it by creating some zones of exclusions. But what is we go the other way and ask what type of world we want to live in, and then how does technology serve that vision?

: What kinds of power do our existing technologies amplify, and do we want that? Remember it's all driven by humans -- we collectively have the power to resist & shape the world we want to live in.

Q&A time.

Q: Final decision v. proxy decision --- intermediate steps are what causes the harm. Maybe we can go directly from data to decision?

A: Really -- can you think of any cases where we go from data to decision without someone designing how that's done?

Q: Final decision v. proxy decision --- intermediate steps are what causes the harm. Maybe we can go directly from data to decision?

A: Really -- can you think of any cases where we go from data to decision without someone designing how that's done?

Q: Can't get away from the classification of give them the pill or not -- try to skip the classification of depressed or not.

A: How do we do that?

Q: By getting enough data.

A: How do we do that?

Q: By getting enough data.

A: If you have perfect surveillance you could do that. Wouldn't need heuristic. Is that the world you want to live in?

Q: Given geographic context of where AI is being addressed, your ex about classifications also Western based, but AI systems not only Western based --- oftentimes backed by gov'ts with very different views of the world.

A: Several of the examples I've used tonight were not from the US / the West. Bigger, important question --- enormous geopolitical rift between US, China & rest of world, construed as a series of client states.

A: China -- we plan to lead the world in AI by 2030, directing universities & companies to do xyz. Don't like how this is portrayed as China is the bad guy. Also in the US. Facebook patent on getting loans based on friends...

A: There are differences, but there are shared concerns too.

Q: I'm also worried about the money is power thing. The folks who are currently benefitting from the classification systems have the money & power to say we want more $.

Q: Outside of academia where we pursue lofty goals for less money, how do we convince folks to give up power & benefits from this unfair system?

A: Great question. Major feeding frenzy right now where industry is hiring people out of academia. And especially concerning because the industry is so concentrated. Academia: We'll pay you less, you won't have the big data sets, and hey all of your colleagues have left.

A: Need to think about what academia does --- this is why we created AI NOW, because academia is the best place to look at these things. But it's going to be hard, and it's important not to gloss over the concentration of money & power in a small set of industrial spaces.

Q: The people who have the resources to work on this are the ones who are doing it. What can we do about that?

A: Regulation?

A: Regulation?

A: Can go into history of corporations getting status of persons and having the prime directive to maximize shareholder value. Difficult to get them to optimize otherwise, unless you have things like social shaming that would make people stop using the services

A: but the other thing is regulation, which we're seeing not in the US but in Europe with the general data protection guidelines, coming online in May.

A: Another discussion about whether those will work. Industry needs to show that they are working on best practices for well being & committed to them even if it means loss of profit.

A: Partnership for AI has all the major companies & NGOs. That is a place where you could start to socialize these best practices. But so far there isn't even best practices.

Q: Do you see the same profit-driven forces that have postdocs and grad students leave acaedmia consolidate the entire information industry into these giant unregulated utilities, and what might an individual technologist do?

Q: Consolidation: Reliance on turn-key systems trained on proprietary datasets.

A: What concerns me is that we know about all of these things because academics and journalist have turned it up in consumer-facing systems. But the vast majority of ML is happening in backend systems you never see & can't test.

A: We are dependent on systems we have no insight into and cannot see. AI NOW has made recommendations that public agencies not use systems where you can't even see how bias might be perpetuated. Need auditable systems which allow for impact statements

Q: AI doens't have the bias, the data does. How do you measure bias like that, does that require you to gather more data, ... infinite loop.

A: Don't have a short answer on how to detect bias in large-scale datasets. FAT* is where you'll find the work on that. Whittaker & I looking at how you think about data provenance.

Missed the last question -- that's it for tonight!

caught a photo of the NN diagram:

• • •

Missing some Tweet in this thread? You can try to

force a refresh