If you have an interest in #AI, #HealthTech or #PatientSafety - then please read this evidence-based thread, which tells the story of @DrMurphy11 & an #eHealth #AI #Chatbot.

Read on, or see single tweet summary here👇0/44

https://twitter.com/DrMurphy11/status/1010815968405262336?s=19

@DrMurphy11 is not a 'nerd'; he's a pretty typical NHS consultant with an interest in #PatientSafety.

On 6th Jan 2017 a tweet about an #eHealth #AI #Chatbot in #NHS trials caught his attention, so he thought he'd take a look. 1/44

Evidence here 👇

https://twitter.com/DrMurphy11/status/817505280766443520?s=19

Dr Murphy downloaded the @babylonhealth App & tried the #Chatbot with a few simple clinical presentations. It quickly became apparent that the #Chatbot had flaws, raising the question of whether the App had been validated as a triage tool.

As evidenced by👇 2/44

https://twitter.com/DrMurphy11/status/820662697788370944?s=19

The flaws in the #AI algorithm included time-critical Red Flag presentations such as #ChestPain. Worryingly, Dr Murphy identified a risk that patients suffering #HeartAttacks could be reassured & not advised to seek urgent medical help! 😲

Watch video evidence here 👇3/44

Others on twitter shared Dr Murphy's concerns & questioned the robustness of the evaluation methods used in developing & testing the #AI #Chatbot. A concerned cardiologist suggested the issue should be flagged to the @CareQualityComm.

Evidenced here 👇4/44

https://twitter.com/pash22/status/823289649229688835?s=19

Dr Murphy flagged the #AI flaws & #PatientSafety concern to Babylon, who updated the #ChestPain algorithm & offered a chat.

@babylonhealth also provided a link to an unpublished report outlining how the #Chatbot had been tested > arxiv.org/abs/1606.02041…

DM from Babylon 👇 5/44

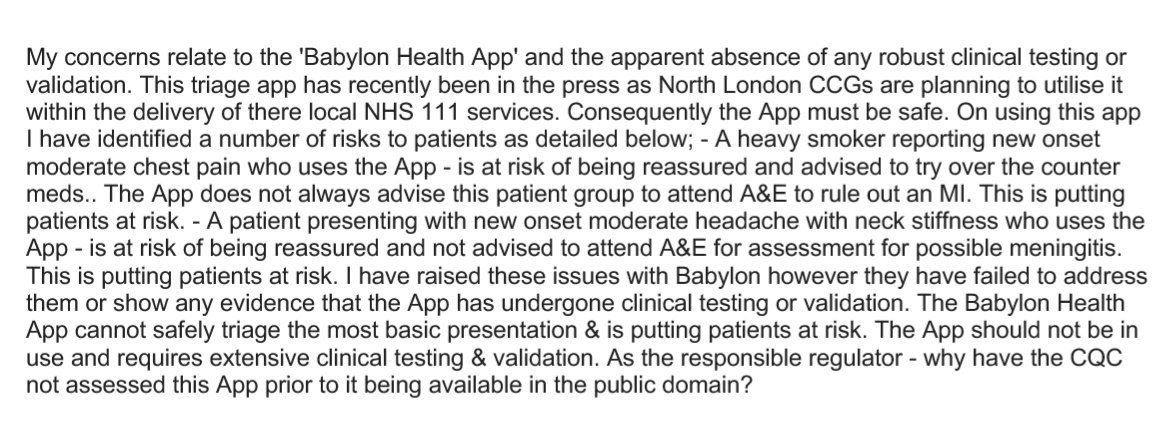

Still concerned, Dr Murphy submitted his observations to the #CQC. The CQC contacted Dr Murphy & further telephone discussion & email correspondence took place. He felt confident that the regulatory bodies would step up.

Initial submission to CQC & follow-up email 👇6/44

NB - not being a nerd, Dr Murphy didn't appreciate the role of @MHRAdevices in #eHealth App regulation at this time...

The #CQC indicated that they would involve the #MHRA, & may also play a role in reviewing the App if it was being used to access the Babylon GP services. 7/44

The CQC were sent links to the flawed #ChestPain triage algorithm & a few others, including a #Headache triage that could potentially delay the presentation of someone with #Meningitis! 😲

Evidenced here 👇 8/44

https://twitter.com/DrMurphy11/status/901014019645075456?s=19

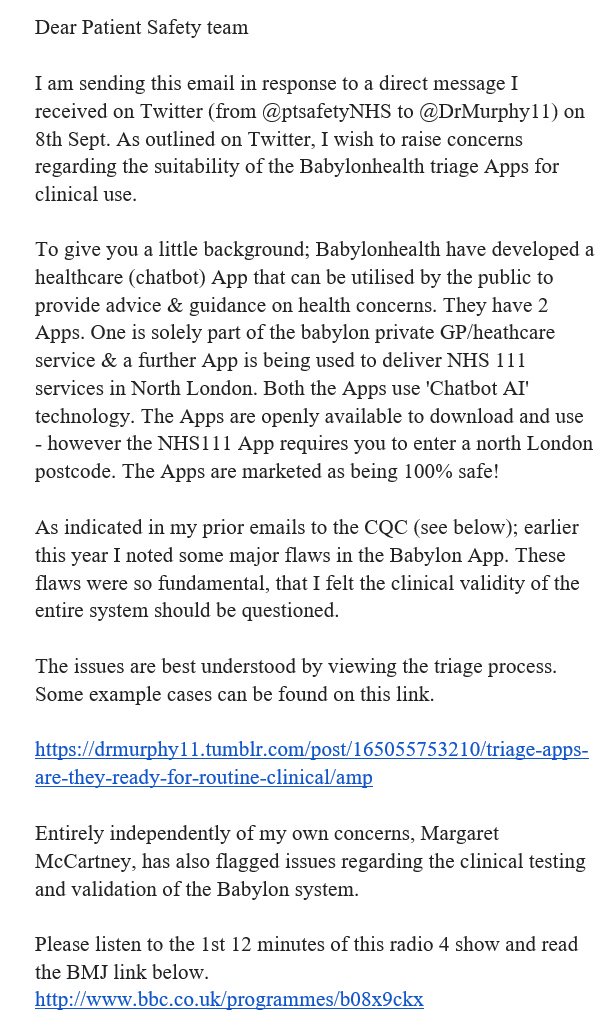

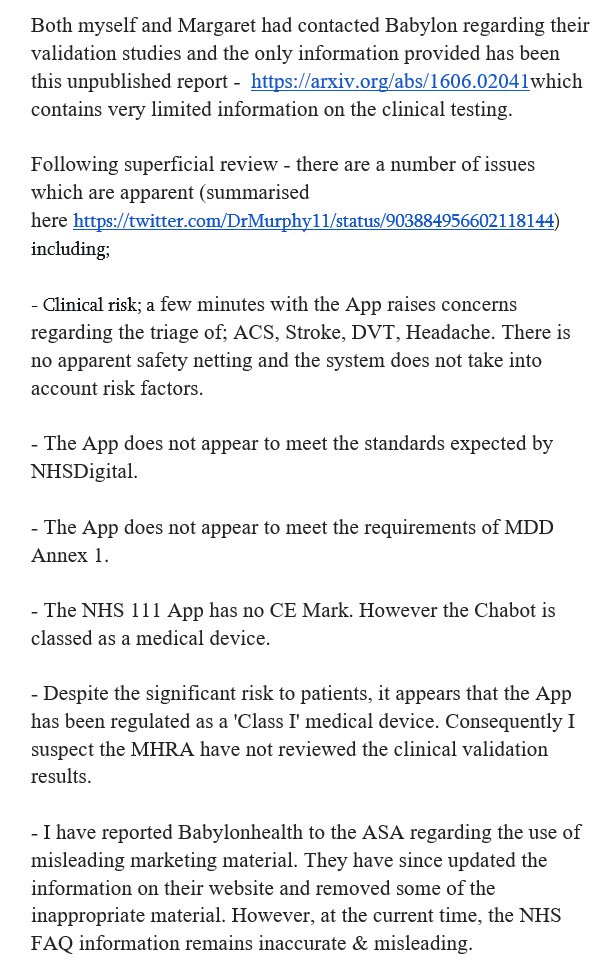

All was quiet until Sept 2017, when Dr Murphy became aware that @mgtmccartney had also questioned the evidence base for the App.

This was discussed in a BMJ article > bmj.com/content/358/bm… & on @BBCRadio4.

Listen here (1st 12mins) 👇9/44

bbc.co.uk/programmes/b08…

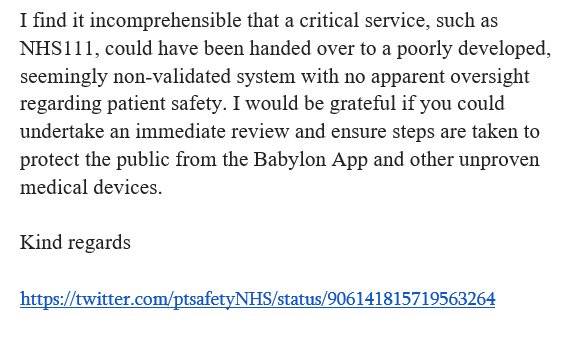

Until this point Dr Murphy hadn't appreciated that @babylonhealth were claiming the flawed #AI #Chatbot was '100% safe'! 😲

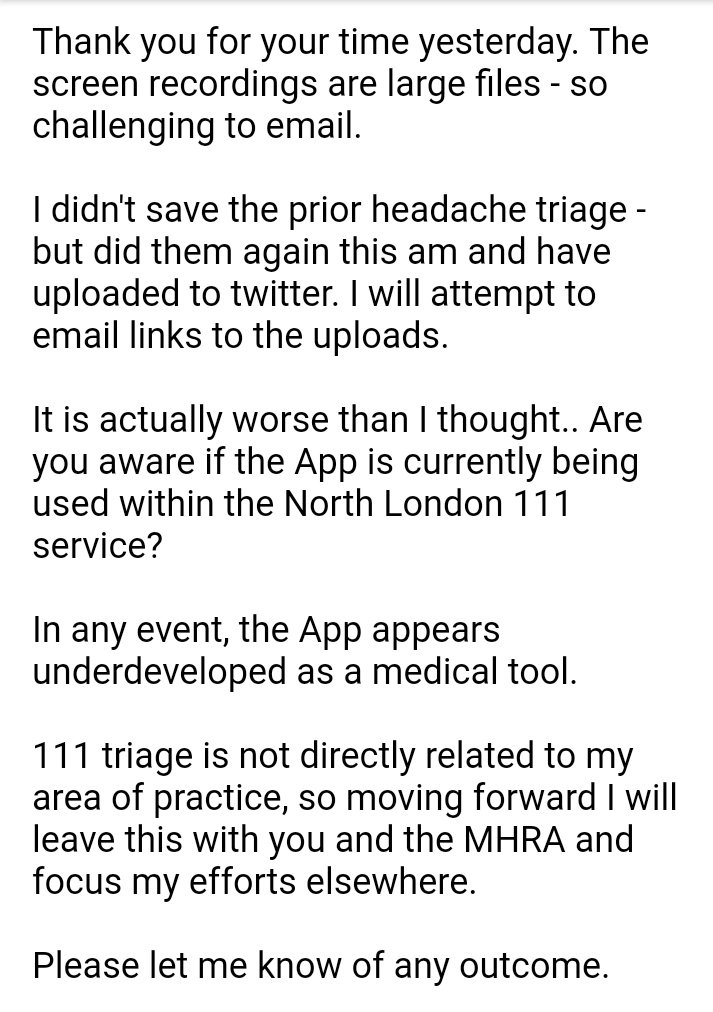

Consequently he re-contacted the CQC & also made @ptsafetyNHS aware of the #PatientSafety concerns.

Email correspondence👇10/44

Dr Murphy downloaded the @babylonhealth #NHS111 App & again identified algorithm flaws that could risk #PatientSafety.

Including time-critical Red Flag presentations such as 'referred cardiac pain'! 😲

NHS111 App triage 👇11/44

https://twitter.com/DrMurphy11/status/903884956602118144?s=19

Babylon didn't acknowledge any concerns, & wrote a dismissive response to @mgtmccartney's BMJ article, suggesting the App had been validated by the NHS & was found to be 'completely safe'!

Babylonhealth response to BMJ👇12/44

bmj.com/content/358/bm…

Babylon did update their website to remove the claim that the #Chatbot had been 'independently' tested, however continued to claim that the #AI #Chatbot was '100% safe'.

Evidenced here 👇13/44

https://twitter.com/DrMurphy11/status/907848826945503232?s=19

Many other #ClinicalGovernance concerns became apparent at this time; including the fact that the #AI #Chatbot App had bizarre T&Cs & apparently wasn't actually a #MedicalDevice 🤔

Evidenced here 👇14/44

https://twitter.com/DrMurphy11/status/934703619164819456?s=19

It also became apparent that the regulation of #eHealth Apps was not as robust as you would expect.

Learn about Class I & II #MedicalDevice regs here👇15/44

https://twitter.com/DrMurphy11/status/931844724847271938?s=19

It was apparent that others had independently tested the @babylonhealth #AI #Chatbot & found that it wasn't as reliable as other #eHealth Apps on the market.

Evidenced here 👇 16/44

https://twitter.com/DrMurphy11/status/953396882151301120?s=19

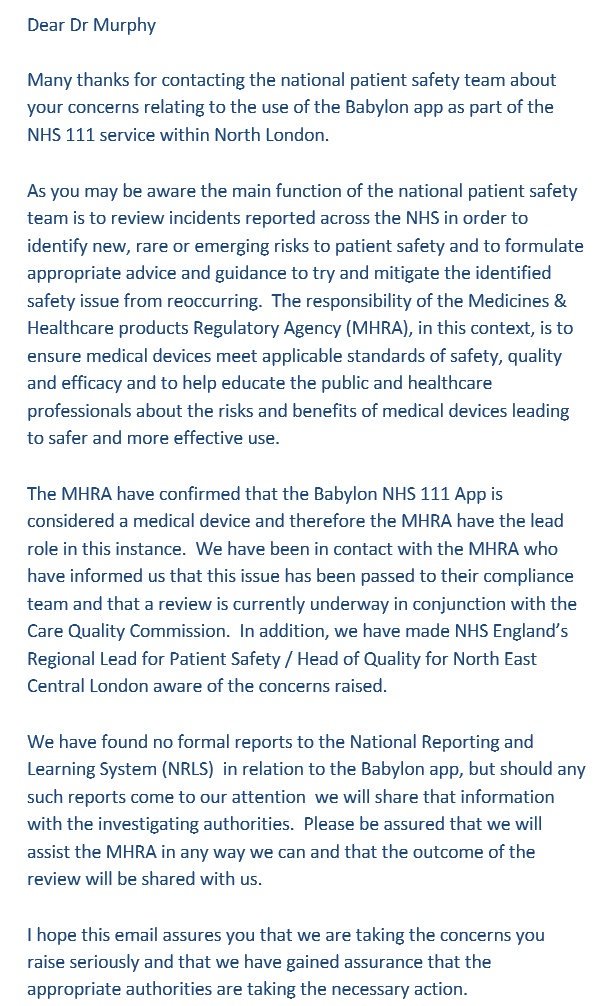

Dr Murphy received a response from the @ptsafetyNHS team - stating that the @MHRAdevices compliance team was undertaking a review of the #Chatbot.

Evidenced here 👇 17/44

It gets a bit confusing now as the prior '@babylonhealth App' got re-named '@GPatHand' and was used to access both #NHS GP services & #Babylon private service (as far as I can tell). The #Chatbot appeared much the same.

Evidence of confusion 👇18/44

https://twitter.com/DrMurphy11/status/976708639703658496?s=19

In Mar 2018 (a year since concerns were 1st raised) fundamental flaws that risked #PatientSafety were still evident in the #AI #Chatbot algorithms.

It raised the question - can an #eHealth #Chatbot be negligent?

Flawed #Headache triage 👇

19/44

https://twitter.com/DrMurphy11/status/976203106273955840?s=19

Flawed Cardiac #ChestPain triage 👇20/44

https://twitter.com/DrMurphy11/status/976839208290668547?s=19

Around this time @babylonhealth updated their website with YET ANOTHER #AI #Chatbot called #AskBabylon 😂

Dr Murphy had to put it to the test...

See questions regarding the #AskBabylon #Chatbot here 👇 22/44

https://twitter.com/DrMurphy11/status/978739756635967488?s=19

#AskBabylon clinical test 1.

Cardiac #ChestPain triage = fail 😲

Evidenced here 👇 23/44

https://twitter.com/DrMurphy11/status/998680504659562501?s=19

#AskBabylon clinical test 2.

#DVT #PE triage = fail 😬

Evidenced here 👇 24/44

https://twitter.com/DrMurphy11/status/995346248877174786?s=19

#AskBabylon clinical test 3.

Ischemic limb = #AI #Chatbot fail

Evidenced here 👇 25/44

https://twitter.com/YourMrBumbles/status/1005512287334092800?s=19

#AskBabylon clinical test 4.

This was a 'suspected cancer' presentation with weight loss and fatigue, that the #AskBabylon #Chatbot diagnosed as depression!

Another #AI diagnostic fail...

Evidenced here 👇 26/44

https://twitter.com/DrMurphy11/status/998640494409379841?s=19

#AskBabylon clinical test 5.

This was a barn door #PE #DVT presentation that you would expect a medical student to get right...

Sadly the #AskBabylon #AI #Chatbot failed, again 😕

Evidenced here 👇 27/44

https://twitter.com/DrMurphy11/status/999896773052137472?s=19

#AskBabylon clinical test 6.

By this point it was clear the #AskBabylon #Chatbot wasn't very good, so I tried it with a really easy #NoseBleed.

The result was amusing...

The now infamous #Nosebleed triage👇 28/44

https://twitter.com/DrMurphy11/status/995727750303485952?s=19

Of concern; the #AskBabylon #Chatbot presented itself as an 'online doctor' with the statement 'I'm here to make sure you get the medical help you need'.

In reality; this was a flawed, unvalidated, non-CE Marked #MedicalDevice!

Evidenced here 👇 29/44

https://twitter.com/DrMurphy11/status/996502814355546113?s=19

To summarise; between Jan 17 & May 18, Babylon released 4 different #AI #Chatbots;

- Babylon

- Babylon NHS111

- Babylon GPatHand

- AskBabylon

IMO - flaws in the algorithms were readily evident & represented a clinical risk.

My own thoughts on why👇30/44

https://twitter.com/DrMurphy11/status/976929736403378176?s=19

Remember, Babylon initially promoted their #Chatbot as 'independently' tested & '100% safe'.

@ASA_UK were informed of the misleading claim & raised it with @babylonhealth. By May 18, the claim was removed from the Babylon website.

Evidenced here👇31/44

https://twitter.com/DrMurphy11/status/1003028412615811073?s=19

Babylon have a tendency to look for a #PositiveSpin.

For example, they didn't like a recent @CareQualityComm report & tried to block its publication.

However, their website comments that they 'passed with flying colours'

Evidenced here👇32/44

https://twitter.com/DrMurphy11/status/1007392012046356482?s=19

The evidence thus far strongly suggests that Babylon marketed flawed, inadequately tested #eHealth #AI #Chatbots.

It's therefore worth considering; how did they meet the #PatientSafety requirements of @NHSDigital?

Possibly because of this?👇33/44

https://twitter.com/DrMurphy11/status/1006289586467803136?s=19

But why would a cutting edge healthcare company promote flawed, inadequately tested #AI #HealthTech as '100% safe'?

IMO;

- The regulations allowed them to

- They thought no one would check

- Corporate culture & conflicts

#ClinicalGovernance👇34/44

https://twitter.com/DrMurphy11/status/1007753144808460288?s=19

But surely #MedicalDevice regulations ensure that all #HealthTech is safe?

> Nope.

Currently class I devices (most #eHealth Apps) are not assessed or approved by @MHRAdevices.

Companies self certify; without external scrutiny

The Regs don't work👇35/44

https://twitter.com/DGwork1/status/987284660815319040?s=19

New #MedicalDevice regulations come into force in May 2020. It is expected that most #eHealth Apps will require Class IIa 'approval' (not self certification).

You may have noticed, Dr Murphy has become a (reluctant) #eHealth Regs nerd 🤓

New Regs👇36/44

pharmatimes.com/magazine/2018/…

Getting back to Babylon. In June 18 the @FT ran a story about a partnership with @SamsungUK @SamsungHealth which described - 'AI-powered consultations'.

Given my experience with the Babylon #AI #Chatbots, I questioned that description.

The FT 👇37/44

https://twitter.com/DrMurphy11/status/1003181332518797312?s=19

You may recall that #PatientSafety concerns regarding the #Chatbot were first flagged to the @CareQualityComm in Feb 2017, yet more than a year later...

There was still no outcome 😕

April 18 CQC email response👇 38/44

Shortly after the @ft article; @HSJnews ran a story about 'safety concerns' that had been flagged to the CQC, in relation to the Babylon symptom checker #AI #Chatbot.

Yet again, the response from @babylonhealth was dismissive...

See 7 tweet thread👇39/44

https://twitter.com/HSJEditor/status/1006079295452794880?s=19

Babylon said: “A number of vested interests would like to see us fail, & often resort to anonymous attacks & false allegations to do so. Some claim to be doctors & are deliberately misrepresenting information, which raises serious probity & professional standards issues.”

👇40/44

Dr Murphy wasn't happy with the statement from @babylonhealth, as it suggested;

1. @DrMurphy11 was a bogus doctor with probity issues 🙄

2. The allegations of complaints/concerns were false.

3. The #Chatbot had undergone rigorous testing.

🤨👇41/44

https://twitter.com/DrMurphy11/status/1006238915387363328?s=19

Despite the claims of 'transparency' - #Babylon / #GPatHand have failed to engage in open debate & questions remain unanswered.

In particular; if @HSJnews is correct, it appears the #Chatbot wasn't CE Marked when issues were raised in Feb 17.

? 👇42/44

https://twitter.com/DrMurphy11/status/1011661491223912449?s=19

To summarise this thread.

IMO; the Babylon #AI #Chatbot provides a good example of how NOT to do #HealthTech.

- Fundamental flaws

- Misplaced confidence in system

- No validation

- Confusing T&Cs

- Misleading marketing

- Dismissive of criticism

👇43/44

https://twitter.com/DrMurphy11/status/979085411644575744

I hope the thread highlights the critical importance of #ClinicalGovernance & #eHealth #PatientSafety, & will help stimulate wider debate within the #HealthTech sector.

& PLEASE, drop the hype...

Usual T&Cs apply 👇 44/44

https://twitter.com/DrMurphy11/status/976730144722378757?s=19
