1/ What are #deepfakes + #AI-generated synthetic media? What threats do they pose to #humanrights, #journalism and the #publicsphere? What should we fear? What can we do? Thread w/ key tech + usages from a recent expert mtg, summarizing blog.witness.org/2018/07/deepfa…

2/ We've had fake images and CGI for years, so what's different now (beyond enabling us to transplant Nicolas Cage's face onto other people)? The ease of making #deepfakes is captured by the vlogger Tom Scott

3/ Barriers to entry for manipulating audio and video are falling: it costs less, needs less tech expertise, and uses open-source code + cloud computing power. Plus, bad actors' sophistication in manipulating social media spaces makes it easier to weaponize fakes and to micro-target them.

4/ This leads to an increasing possibility of REALITY EDITS: removing elements from, or adding them to, photos and videos in ways that challenge our ability to document reality and preserve the evidentiary value of images, and that enhance perpetrators' ability to challenge the truth of rights abuses.

5/ More ability to create CREDIBLE DOPPELGANGERS of real people, enhancing the ability to manipulate the public or individuals into committing rights abuses or inciting violence or conflict. Could be Trump, Aung San Suu Kyi or your local politician.

6/ Greater potential for NEWS REMIXING that exploits peripheral cues of credibility and the rapid news cycle to disrupt and change public narratives. For a pre-deepfakes example, see this recent case from Moldova: medium.com/dfrlab/electio…

7/ PLAUSIBLE DENIABILITY for perpetrators, who can reflexively claim “That’s a deepfake” about incriminating footage or, taken further, dismiss any contested information as another form of fake news... 'It's all fake news, don't believe anything you see.'

8/ FLOODS OF FALSEHOOD, created via computational propaganda and individualized micro-targeting, contribute to disrupting the remaining public sphere and to overwhelming fact-finding and verification approaches. blog.witness.org/2018/07/deepfa…

9/ It matters to push back against this. At @witnessorg we know the critical value of people being able to share videos/images to expose injustice, share powerful civic storytelling and mobilize movements. We need to defend that and fight the evolving problems of image manipulation + image-based violence.

10/ So we convened an expert meeting to get proactive on solutions around #deepfakes and #AI-generated media. You can read the full report here: witness.mediafire.com/file/q5juw7dc3…

11/ First question: what are we talking about here? What new and improved tools for audio and video manipulation and creation (often based on #deeplearning, #GANs and other ML approaches) have malicious uses?

12/ INDIVIDUALIZED SIMULATED AUDIO: Enhanced ability to simulate individuals’ voices, as developed and made commercially available by providers like @Lyrebird or Baidu DeepVoice. Can be used for good (e.g. projectrevoice.org) and for creativity, but also for malicious impersonation.

13/ Emerging consumer tools make it easier to SELECTIVELY EDIT, DELETE OR CHANGE FOREGROUND OR BACKGROUND elements in video. Concepts such as Adobe Cloak build on the editing currently available in tools like Photoshop or Premiere to allow more seamless editing of elements in video.

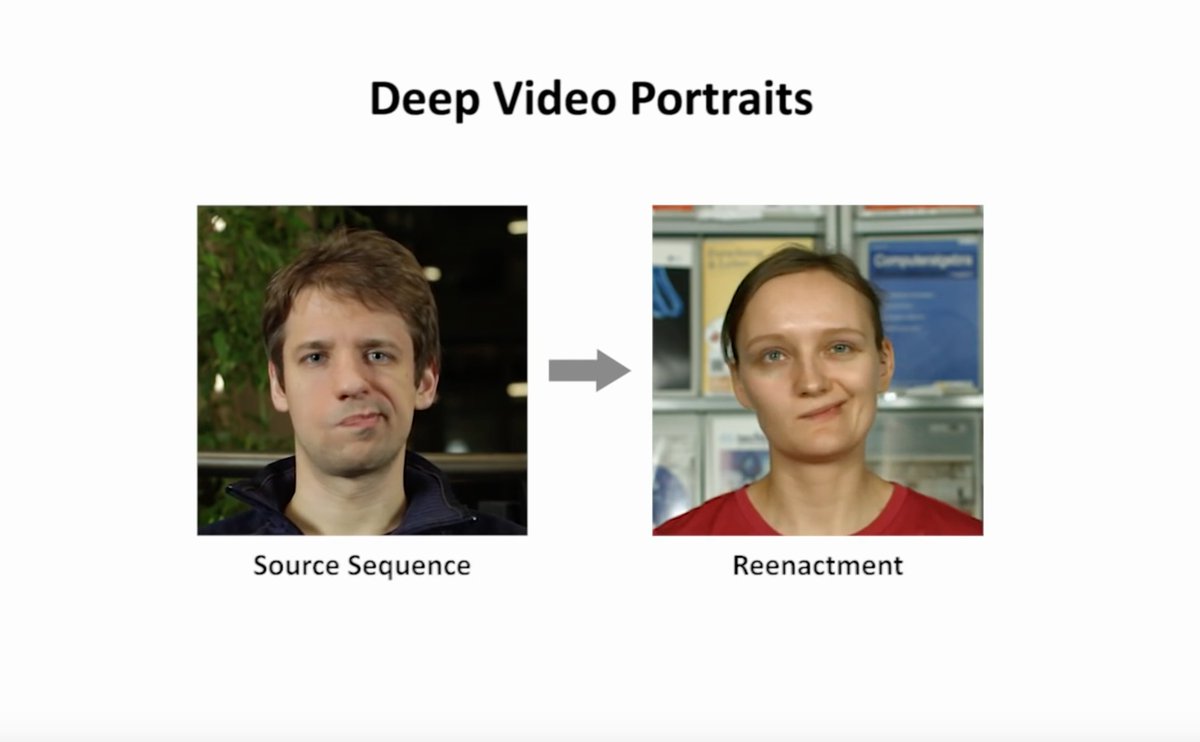

14/ FACIAL RE-ENACTMENT: Using images of real people as “puppets” and manipulating their faces, expressions and upper-body movements. Face2Face and Deep Video Portraits allow transfer of the facial/upper-body movements of one person onto another person’s face/upper body. web.stanford.edu/~zollhoef/pape…

15/ Realistic FACIAL RECONSTRUCTION AND LIPSYNC created around existing audio tracks of a person, as seen for example in the LipSync Obama project. ted.com/talks/supasorn…

16/ Real people with EXCHANGE OF ONE REGION, TYPICALLY THE FACE: as with deepfakes created via FakeApp or FaceSwap. Also related to tech in tools like Snapchat, where a simulation of one person's face is imposed over another person's face, or a hybrid face is produced.

17/ Combinations such as a DEEPFAKE MATCHED WITH AUDIO (FAKE OR REAL) AND RETOUCHING, e.g. the Obama-Jordan Peele video, in which the actor-director Jordan Peele made a realistic Obama say words that Peele himself was saying.

18/ We're now basically entering an arms race between manual/automatic synthesis of media and manual/automatic forensic/detection approaches. Manual synthesis includes much of the innovation in CGI in recent years; automatic synthesis includes many of the tools shared here.

19/ Manual forensics is a rich field of practice drawing on computer vision/signal processing, e.g. explicit checks of perspective geometry, lighting, shadows and the ‘physics’ of images, + detecting copying and splicing between images, or evidence of the camera model used for a photo.
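The copy-and-splice checks in tweet 19 can be illustrated with a toy sketch (a hedged example, not a method presented at the WITNESS meeting). It looks for pixel-identical square patches at two different places in one grayscale image, a crude signature of a region cloned within the same photo ("copy-move"). The `find_copy_move_blocks` name, the list-of-rows image format and the `block`/`min_shift` defaults are illustrative assumptions. Real detectors match robust features (e.g. DCT coefficients of blocks) so they survive recompression and noise, which exact matching does not.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Image = List[List[int]]  # hypothetical format: grayscale rows, pixel values 0-255
Pos = Tuple[int, int]    # (row, column) of a patch's top-left corner


def find_copy_move_blocks(image: Image, block: int = 4, min_shift: int = 4) -> List[Tuple[Pos, Pos]]:
    """Return pairs of positions whose block x block patches are pixel-identical."""
    height, width = len(image), len(image[0])
    seen: Dict[Tuple[int, ...], List[Pos]] = defaultdict(list)
    for y in range(height - block + 1):
        for x in range(width - block + 1):
            patch = tuple(image[y + dy][x + dx] for dy in range(block) for dx in range(block))
            if len(set(patch)) == 1:
                continue  # flat patches (sky, walls) match trivially, so skip them
            seen[patch].append((y, x))
    matches: List[Tuple[Pos, Pos]] = []
    for positions in seen.values():
        for i, (y1, x1) in enumerate(positions):
            for y2, x2 in positions[i + 1:]:
                # ignore nearly overlapping patches, which can match on repetitive texture
                if max(abs(y1 - y2), abs(x1 - x2)) >= min_shift:
                    matches.append(((y1, x1), (y2, x2)))
    return matches
```

For example, on a 12x12 image with all-distinct pixel values, copying the 4x4 region at (1, 1) onto (7, 7) yields the single match `((1, 1), (7, 7))`.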

20/ Automatic forensics often builds on tools similar to those used for creating fakes, incl. neural networks, #deeplearning and ML. Examples include detecting copy-splicing or different camera models, producing heat maps of fake pixels in deepfakes, and identifying background sources of elements in generated images.

21/ + Use of neural networks to detect physiological inconsistencies in synthetic media, e.g. absence of blinking (arxiv.org/abs/1806.02877), or use of GANs to detect fake images by training on datasets of images created with existing tools, e.g. FaceForensics.
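The blinking cue in tweet 21 can be sketched in a few lines. Note the swap: the linked paper trains a neural network on eye regions, whereas the sketch below uses a simpler, well-known heuristic, the eye aspect ratio (EAR) computed from six eye landmarks, and flags clips whose blink rate falls below an assumed floor. The landmark ordering, the 0.2 EAR threshold, the 2-frame minimum and the 5-blinks-per-minute floor are all assumptions for illustration; the landmarks themselves would come from a separate face-landmark detector not shown here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks: corners p1, p4; upper lid p2, p3; lower lid p6, p5 (assumed order)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)  # drops toward 0 as the eye closes


def count_blinks(ear_per_frame: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def suspiciously_few_blinks(ear_per_frame: Sequence[float], fps: float = 30.0,
                            min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate is below an assumed human floor."""
    minutes = len(ear_per_frame) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ear_per_frame) / minutes < min_blinks_per_minute
```

A low rate alone is weak evidence: people blink less when reading or staring, and generators can be trained to blink, so this is at most one signal among many (tweet 22's point about detectors tracking specific synthesis flaws applies here too).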

22/ BUT automatic systems are trained on specific databases and might mainly detect the inconsistencies of specific synthesis techniques, and OH DEAR... counter-forensics can use the same tools (e.g. GANs) to wipe or fake the forensic traces of cameras.

23/ Researchers disagree on whether the “arms race” will be won by forgers or detectors. Humans are not good at telling real from fake, but with sufficient training data, could automatic detectors keep up with generators? We'll see... Next thread on solutions/recommendations.

What's your own prognosis on whether detection will keep up with fakery? (Noting, of course, that detection is only the start of addressing malicious use.)

And here's the THREAD on potential solutions:

https://twitter.com/SamGregory/status/1024487193170112517?s=19
